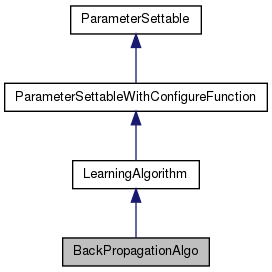

BackPropagationAlgo Class Reference

Back-Propagation Algorithm implementation.

Classes | |

| class | cluster_deltas |

| The struct of Clusters and Deltas. | |

Public Member Functions | |

| BackPropagationAlgo (NeuralNet *n_n, UpdatableList update_order, double l_r=0.1) | |

| Constructor. | |

| BackPropagationAlgo () | |

| Default Constructor. | |

| ~BackPropagationAlgo () | |

| Destructor. | |

| virtual double | calculateMSE (const Pattern &) |

| Calculate the Mean Square Error with respect to the Pattern passed. | |

| virtual void | configure (ConfigurationParameters &params, QString prefix) |

| Configures the object using a ConfigurationParameters object. | |

| void | disableMomentum () |

| Disable momentum. | |

| void | enableMomentum () |

| Enable the momentum. | |

| DoubleVector | getError (Cluster *) |

| This method returns the deltas calculated by the Back-propagation Algorithm. | |

| virtual void | learn (const Pattern &) |

| Starts a single training step. | |

| virtual void | learn () |

| Perform a single step of the learning algorithm. | |

| double | momentum () const |

| Return the momentum. | |

| double | rate () const |

| Return the learning rate. | |

| virtual void | save (ConfigurationParameters &params, QString prefix) |

| Save the current status of the parameters into the ConfigurationParameters object passed. | |

| void | setMomentum (double newmom) |

| Set the momentum value. | |

| void | setRate (double newrate) |

| Set the learning rate. | |

| void | setTeachingInput (Cluster *output, const DoubleVector &ti) |

| Set the teaching input for the Cluster passed. | |

| void | setUpdateOrder (const UpdatableList &update_order) |

| Set the order in which the error is backpropagated through the NeuralNet. | |

| UpdatableList | updateOrder () const |

| Return the order in which the error is backpropagated through the NeuralNet. | |

Static Public Member Functions | |

| static void | describe (QString type) |

| Add to Factory::typeDescriptions() the descriptions of all parameters and subgroups. | |

Protected Member Functions | |

| virtual void | neuralNetChanged () |

| Configure internal data for backpropagation on the NeuralNet that has been set. | |

Detailed Description

Back-Propagation Algorithm implementation.

Definition at line 41 of file backpropagationalgo.h.

Constructor & Destructor Documentation

| BackPropagationAlgo | ( | NeuralNet * | n_n, |

| UpdatableList | update_order, | ||

| double | l_r = 0.1 |

||

| ) |

Constructor.

- Parameters:

  - n_n: the NeuralNet to train
  - update_order: the UpdatableList giving the backpropagation sequence
  - l_r: the learning rate factor (a double, 0.1 by default)

Definition at line 27 of file backpropagationalgo.cpp.

References BackPropagationAlgo::neuralNetChanged().

| BackPropagationAlgo | ( | ) |

Default Constructor.

Definition at line 34 of file backpropagationalgo.cpp.

References BackPropagationAlgo::neuralNetChanged().

| ~BackPropagationAlgo | ( | ) |

Destructor.

Definition at line 41 of file backpropagationalgo.cpp.

Member Function Documentation

| double calculateMSE | ( | const Pattern & | pat | ) | [virtual] |

Calculate the Mean Square Error with respect to the Pattern passed.

Implements LearningAlgorithm.

Definition at line 188 of file backpropagationalgo.cpp.

References NeuralNet::inputClusters(), Pattern::inputsOf(), farsa::mse(), LearningAlgorithm::neuralNet(), NeuralNet::outputClusters(), Pattern::outputsOf(), and NeuralNet::step().

| void configure | ( | ConfigurationParameters & | params, |

| QString | prefix | ||

| ) | [virtual] |

Configures the object using a ConfigurationParameters object.

You can configure the BackPropagation parameters from the configuration file as follows:

    [aBackPropagationGroup]
    neuralnet = nameOfGroupOfTheNeuralNet
    rate = learningRate ; if not present, the default is 0.0 !!
    momentum = momentumRate ; if not present, momentum is disabled
    order = cluster2 linker1 cluster1 ; order of the Clusters and Linkers through which the error is backpropagated

Note that there are no configuration parameters for loading the learning set here. This is intentional. You need to load the learning set separately and then call the method learn on the loaded learning set.

You can do that by creating groups like the following (see class Pattern):

    [learningSet:1]
    cluster:1 = cluster1
    inputs:1 = 1 2 3 ; input
    cluster:2 = cluster2
    outputs:2 = 2 4 ; desired output
    [learningSet:2]
    cluster:1 = cluster1
    inputs:1 = 2 4 6 ; input
    cluster:2 = cluster2
    outputs:2 = 4 8 ; desired output
    ...
    [learningSet:N]
    cluster:1 = cluster1
    inputs:1 = 10 20 30 ; input
    cluster:2 = cluster2
    outputs:2 = 20 40 ; desired output

Then call the method LearningAlgorithm::loadPatternSet( params, "learningSet" ).

- Parameters:

  - params: the configuration parameters object with the parameters to use
  - prefix: the prefix to use to access the object configuration parameters. This is guaranteed to end with the separator character when called by the factory, so you don't need to add one

Implements ParameterSettableWithConfigureFunction.

Definition at line 241 of file backpropagationalgo.cpp.

References ConfigurationParameters::getObjectFromGroup(), ConfigurationParameters::getObjectFromParameter(), ConfigurationParameters::getValue(), LearningAlgorithm::setNeuralNet(), ConfigurationParameters::startRememberingGroupObjectAssociations(), and ConfigurationParameters::stopRememberingGroupObjectAssociations().

| void describe | ( | QString | type | ) | [static] |

Add to Factory::typeDescriptions() the descriptions of all parameters and subgroups.

Reimplemented from ParameterSettable.

Definition at line 280 of file backpropagationalgo.cpp.

References ParameterSettable::addTypeDescription(), ParameterSettable::RealDescriptor::def(), ParameterSettable::Descriptor::describeObject(), ParameterSettable::Descriptor::describeReal(), ParameterSettable::RealDescriptor::help(), ParameterSettable::ObjectDescriptor::help(), ParameterSettable::IsMandatory, ParameterSettable::RealDescriptor::limits(), ParameterSettable::ObjectDescriptor::props(), and ParameterSettable::ObjectDescriptor::type().

| void disableMomentum | ( | ) | [inline] |

Disable momentum.

Definition at line 103 of file backpropagationalgo.h.

| void enableMomentum | ( | ) |

Enable the momentum.

Definition at line 95 of file backpropagationalgo.cpp.

| DoubleVector getError | ( | Cluster * | cl | ) |

This method returns the deltas calculated by the Back-propagation Algorithm.

These deltas are recomputed every time new targets are defined for the output layer(s); they are then used to update the network weights when the method learn() is called.

They are also useful for measuring network performance, but for that purpose getError() must be used outside the learning cycle (a full learning iteration, i.e. one presentation of all the patterns of the training data set to the network). To do so, call getError( Cluster * anyOutputCluster ) for each line of your training set; each call returns a DoubleVector with the deltas for each unit of the cluster considered.

Then you can use those values to calculate your desired performance measure.

For instance, to calculate the Mean Squared Error (MSE) of the network on your training data set, accumulate the squares of the values returned by getError( anyOutputCluster ) for each line of the set and, at the end, divide the total by the length of that set (by definition, the MSE is the mean of the squared differences between the target and actual outputs over a sequence of values). Getting the Root Mean Squared Error (RMSE) from this is trivial: take the square root of the MSE.
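The accumulation described above can be sketched without the library. In this minimal example each inner vector stands in for the DoubleVector returned by getError() for one line of the training set; the function names and the use of std::vector are assumptions made for illustration and are not part of the class.

```cpp
#include <cmath>
#include <vector>

// Hypothetical helper (not part of the library). Each inner vector stands in
// for the deltas returned by getError() for one line of the training set.
double meanSquaredError(const std::vector<std::vector<double>>& errorsPerPattern) {
    if (errorsPerPattern.empty()) {
        return 0.0; // nothing to average
    }
    double sumOfSquares = 0.0;
    for (const std::vector<double>& errors : errorsPerPattern) {
        for (double e : errors) {
            sumOfSquares += e * e; // accumulate the squared deltas
        }
    }
    // Divide by the length of the set (number of lines), as the text describes.
    return sumOfSquares / static_cast<double>(errorsPerPattern.size());
}

// The RMSE is the square root of the MSE.
double rootMeanSquaredError(const std::vector<std::vector<double>>& errorsPerPattern) {
    return std::sqrt(meanSquaredError(errorsPerPattern));
}
```

With several units per output cluster, some definitions also divide by the number of units; this sketch follows the text and divides only by the number of lines.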

- Warning:

- The data returned by getError( Cluster * ) is computed every time you set a new output target, which means every time you call the setTeachingInput( Cluster * anyOutputCluster, const DoubleVector & teaching_input ) method. If your network has more than one output layer you have to call setTeachingInput() for all the output clusters before calling getError() for any of the clusters.

Definition at line 86 of file backpropagationalgo.cpp.

| void learn | ( | const Pattern & | pat | ) | [virtual] |

Starts a single training step.

Implements LearningAlgorithm.

Definition at line 172 of file backpropagationalgo.cpp.

References NeuralNet::inputClusters(), Pattern::inputsOf(), BackPropagationAlgo::learn(), LearningAlgorithm::neuralNet(), NeuralNet::outputClusters(), Pattern::outputsOf(), BackPropagationAlgo::setTeachingInput(), and NeuralNet::step().

| void learn | ( | ) | [virtual] |

Performs a single step of the learning algorithm.

Implements LearningAlgorithm.

Definition at line 132 of file backpropagationalgo.cpp.

References farsa::deltarule().

Referenced by BackPropagationAlgo::learn().
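The reference to farsa::deltarule() suggests that the weight update follows the delta rule. The following is a sketch of the textbook rule for a fully connected layer, not the library's code: the function name, the row-per-input weight layout and the use of std::vector are all assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not the library's farsa::deltarule). Textbook delta rule:
//   weights[i][j] += rate * inputs[i] * deltas[j]
// Assumed layout: weights[i][j] connects input unit i to output unit j.
void deltaRuleUpdate(std::vector<std::vector<double>>& weights,
                     const std::vector<double>& inputs,
                     const std::vector<double>& deltas,
                     double rate) {
    assert(weights.size() == inputs.size());
    for (std::size_t i = 0; i < inputs.size(); ++i) {
        assert(weights[i].size() == deltas.size());
        for (std::size_t j = 0; j < deltas.size(); ++j) {
            weights[i][j] += rate * inputs[i] * deltas[j];
        }
    }
}
```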

| double momentum | ( | ) | const [inline] |

Returns the momentum.

Definition at line 95 of file backpropagationalgo.h.

| void neuralNetChanged | ( | ) | [protected, virtual] |

Configure internal data for backpropagation on the NeuralNet that has been set.

Implements LearningAlgorithm.

Definition at line 45 of file backpropagationalgo.cpp.

References LearningAlgorithm::neuralNet(), and NeuralNet::outputClusters().

Referenced by BackPropagationAlgo::BackPropagationAlgo(), and BackPropagationAlgo::setUpdateOrder().

| double rate | ( | ) | const [inline] |

Returns the learning rate.

Definition at line 85 of file backpropagationalgo.h.

| void save | ( | ConfigurationParameters & | params, |

| QString | prefix | ||

| ) | [virtual] |

Save the current status of the parameters into the ConfigurationParameters object passed.

- Parameters:

  - params: the configuration parameters object in which to save the current parameters
  - prefix: the prefix to use to access the object configuration parameters

Implements ParameterSettable.

Definition at line 264 of file backpropagationalgo.cpp.

References ConfigurationParameters::createParameter(), Updatable::name(), LearningAlgorithm::neuralNet(), NeuralNet::save(), and ConfigurationParameters::startObjectParameters().

| void setMomentum | ( | double | newmom | ) | [inline] |

Set the momentum value.

Definition at line 90 of file backpropagationalgo.h.
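This page does not say how the momentum value enters the weight update. As a hedged illustration, the sketch below shows the classic momentum formulation, in which a fraction of the previous step is added to the current one. The function name and signature are hypothetical, and the library may apply momentum differently.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper illustrating the classic momentum update:
//   step[i]     = rate * descentDirection[i] + momentum * previousStep[i]
//   weights[i] += step[i]
// previousStep is overwritten with the step just applied, ready for the next call.
void updateWithMomentum(std::vector<double>& weights,
                        std::vector<double>& previousStep,
                        const std::vector<double>& descentDirection,
                        double rate,
                        double momentum) {
    assert(weights.size() == previousStep.size());
    assert(weights.size() == descentDirection.size());
    for (std::size_t i = 0; i < weights.size(); ++i) {
        const double step = rate * descentDirection[i] + momentum * previousStep[i];
        weights[i] += step;
        previousStep[i] = step;
    }
}
```

With momentum set to 0 this reduces to a plain learning-rate step.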

| void setRate | ( | double | newrate | ) | [inline] |

Set the learning rate.

Definition at line 80 of file backpropagationalgo.h.

| void setTeachingInput | ( | Cluster * | output, |

| const DoubleVector & | ti | ||

| ) |

Set the teaching input for the Cluster passed.

- Parameters:

  - ti: the DoubleVector teaching input

Definition at line 77 of file backpropagationalgo.cpp.

References Cluster::outputs(), and farsa::subtract().
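The references to Cluster::outputs() and farsa::subtract() suggest that the error is obtained as an element-wise difference between the teaching input and the cluster outputs. A minimal stand-alone sketch of that idea follows. The function name is hypothetical, and the sign convention (teaching input minus output) is an assumption that the library may reverse.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in (not library code): element-wise difference between
// the teaching input and the current outputs of an output cluster.
// Assumption: error = teaching input - output.
std::vector<double> outputError(const std::vector<double>& teachingInput,
                                const std::vector<double>& outputs) {
    assert(teachingInput.size() == outputs.size());
    std::vector<double> error(outputs.size());
    for (std::size_t i = 0; i < outputs.size(); ++i) {
        error[i] = teachingInput[i] - outputs[i];
    }
    return error;
}
```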

Referenced by BackPropagationAlgo::learn().

| void setUpdateOrder | ( | const UpdatableList & | update_order | ) |

Set the order in which the error is backpropagated through the NeuralNet.

- Warning:

- Calling this method also clears any data from previous processing and re-initializes all internal data.

Definition at line 72 of file backpropagationalgo.cpp.

References BackPropagationAlgo::neuralNetChanged().
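To illustrate why the order matters, consider an order such as cluster2, linker1, cluster1: the deltas of cluster2 must be known before they can be carried back through linker1 to cluster1. The sketch below shows that single step in the textbook way for a fully connected linker. The function name, the weight layout and the caller-supplied derivatives are assumptions; this is not the library's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: carry the deltas of a downstream cluster back through
// a fully connected linker to the upstream cluster.
// Assumed layout: weights[i][j] connects upstream unit i to downstream unit j.
// derivatives[i] is the activation-function derivative at upstream unit i,
// supplied by the caller because it depends on the activation function used.
std::vector<double> backpropagateThroughLinker(
        const std::vector<std::vector<double>>& weights,
        const std::vector<double>& downstreamDeltas,
        const std::vector<double>& derivatives) {
    assert(weights.size() == derivatives.size());
    std::vector<double> upstreamDeltas(weights.size(), 0.0);
    for (std::size_t i = 0; i < weights.size(); ++i) {
        assert(weights[i].size() == downstreamDeltas.size());
        double weightedSum = 0.0;
        for (std::size_t j = 0; j < downstreamDeltas.size(); ++j) {
            weightedSum += weights[i][j] * downstreamDeltas[j];
        }
        upstreamDeltas[i] = derivatives[i] * weightedSum;
    }
    return upstreamDeltas;
}
```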

| UpdatableList updateOrder | ( | ) | const [inline] |

Return the order in which the error is backpropagated through the NeuralNet.

Definition at line 63 of file backpropagationalgo.h.

The documentation for this class was generated from the following files:

- nnfw/include/backpropagationalgo.h

- nnfw/src/backpropagationalgo.cpp