Evolution of Object Manipulation Skills in Humanoid Robots

Gianluca Massera, Elio Tuci, Stefano Nolfi

1. Introduction and summary of the obtained results

The control of arm and hand movements in human and nonhuman primates is a fundamental research topic in the cognitive sciences, neuroscience and robotics. Despite the importance of the topic, the large body of available behavioural and neuroscientific data, and the vast number of studies conducted, the issue of how primates and humans learn to display reaching and grasping behaviour remains highly controversial. Moreover, although many of the aspects that make these problems difficult have been identified, experimental research based on different techniques does not seem to converge towards a general methodology for developing robots with effective object-manipulation abilities.

In the studies reported below, we investigated whether humanoid robots can develop object-manipulation skills through an adaptive process in which the free parameters regulate the fine-grained interactions between the robot and the environment, and in which variations of the free parameters are retained or discarded on the basis of their effect on the robot's overall ability to interact appropriately with the objects.

The obtained results demonstrate that this approach can lead to effective solutions. The analyses of the adapted individuals and of the prerequisites for their development indicate that active perception, and more generally the exploitation of the properties arising from robot/environment interactions, play a key role. Moreover, they indicate that morphological aspects (e.g. the compliance of the finger joints or the properties of muscle-like actuators) are crucial prerequisites for the development of effective solutions.

2. Evolution of prehension abilities

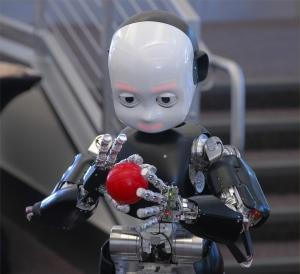

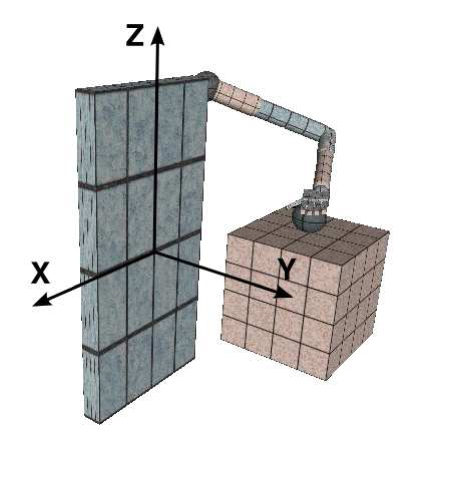

In a first series of experiments (Massera, Cangelosi & Nolfi, 2007), we trained an anthropomorphic robotic arm controlled by an artificial neural network to reach, grasp and lift objects lying on a table in front of the robot (see Figure 1, left). The experiments were carried out in simulation, using an accurate simulator of the iCub robot (Figure 1, right).

Figure 1. Left: The robot and the environment. Right: The iCub robot.

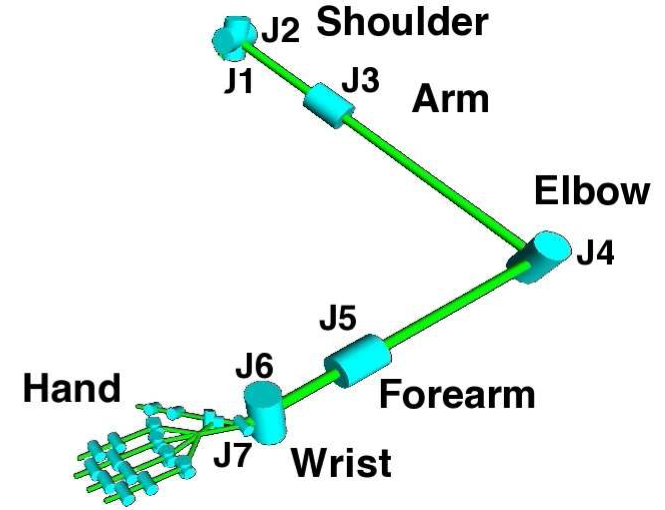

The robot is provided with an anthropomorphic robotic arm with 7 DOFs, a robotic hand with 20 DOFs, proprioceptive and touch sensors distributed within the arm and the hand and a simple vision system able to detect the relative position of the object (see Figure 2).

The 7 joints of the arm, which control the 7 corresponding DOFs, are actuated by 14 simulated antagonist muscles implemented according to Hill's muscle model. The 20 DOFs of the hand, instead, are actuated by 2 velocity-proportional controllers that determine the extension/flexion of all the fingers and the supination/pronation of the thumb.
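To make the actuation scheme more concrete, here is a minimal sketch of one arm joint driven by an antagonist flexor/extensor pair of Hill-type muscles. It assumes a textbook Hill model (a bell-shaped active force-length curve and Hill's hyperbolic force-velocity relation with the commonly used curvature constant a/F0 = 0.25) in dimensionless units. All constants and function names are illustrative assumptions rather than the values used in the authors' simulator, and passive elastic and damping terms are omitted.

```python
import math

# Illustrative, dimensionless parameters (assumptions, not the simulator's values).
K_HILL = 0.25      # a / F0: curvature constant of Hill's force-velocity hyperbola
V_MAX = 5.0        # maximal shortening velocity (optimal lengths per second)
L_OPT = 1.0        # optimal (resting) muscle length
FL_WIDTH = 0.45    # width of the active force-length curve
MOMENT_ARM = 0.2   # lever arm of both muscles around the joint


def force_velocity(v_short: float) -> float:
    """Hill's relation (F + a)(v + b) = (F0 + a) b, normalised by F0.

    v_short > 0 means shortening. Lengthening is clamped to the isometric
    value, a simplification made only for this sketch.
    """
    v = min(max(v_short, 0.0), V_MAX)
    return (1.0 - v / V_MAX) / (1.0 + v / (K_HILL * V_MAX))


def force_length(length: float) -> float:
    """Bell-shaped active force-length curve peaking at L_OPT."""
    return math.exp(-((length - L_OPT) / FL_WIDTH) ** 2)


def muscle_force(activation: float, length: float, v_short: float) -> float:
    """Active force of one muscle, with the activation clipped to [0, 1]."""
    a = min(max(activation, 0.0), 1.0)
    return a * force_length(length) * force_velocity(v_short)


def joint_torque(a_flex: float, a_ext: float, theta: float, omega: float) -> float:
    """Net torque on a joint driven by an antagonist flexor/extensor pair.

    Positive theta (flexion) shortens the flexor and stretches the extensor.
    """
    l_flex = L_OPT - MOMENT_ARM * theta
    l_ext = L_OPT + MOMENT_ARM * theta
    f_flex = muscle_force(a_flex, l_flex, MOMENT_ARM * omega)
    f_ext = muscle_force(a_ext, l_ext, -MOMENT_ARM * omega)
    return MOMENT_ARM * (f_flex - f_ext)


if __name__ == "__main__":
    # Balanced co-activation at rest gives zero net torque ...
    print(joint_torque(0.5, 0.5, theta=0.0, omega=0.0))
    # ... while a stronger flexor command produces a positive (flexing) torque.
    print(joint_torque(0.8, 0.2, theta=0.0, omega=0.0))
```

Each joint thus needs two motor commands (one per muscle), which is why 7 joints require 14 actuated muscles.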

Seven arm propriosensors encode the current angles of the seven corresponding DOFs located on the arm and on the wrist. Five hand propriosensors encode the current extension/flexion state of the five corresponding fingers. Six tactile sensors detect whether each of the five fingers, and the part constituted by the palm and wrist, is in physical contact with another object. Finally, three vision sensors encode the output of a vision system that computes the distance of the object relative to the hand along three orthogonal axes.

Figure 2. The kinematic chain of the arm and of the hand (left and right, respectively). Cylinders represent rotational DoFs; the axes of the cylinders indicate the corresponding axes of rotation; the links among cylinders represent the rigid connections that make up the arm structure. Ji (i = 1, ..., 12) refers to the joints whose state is both sensed and set by the arm's controller. Ti (i = 1, ..., 10) indicates the tactile sensors.

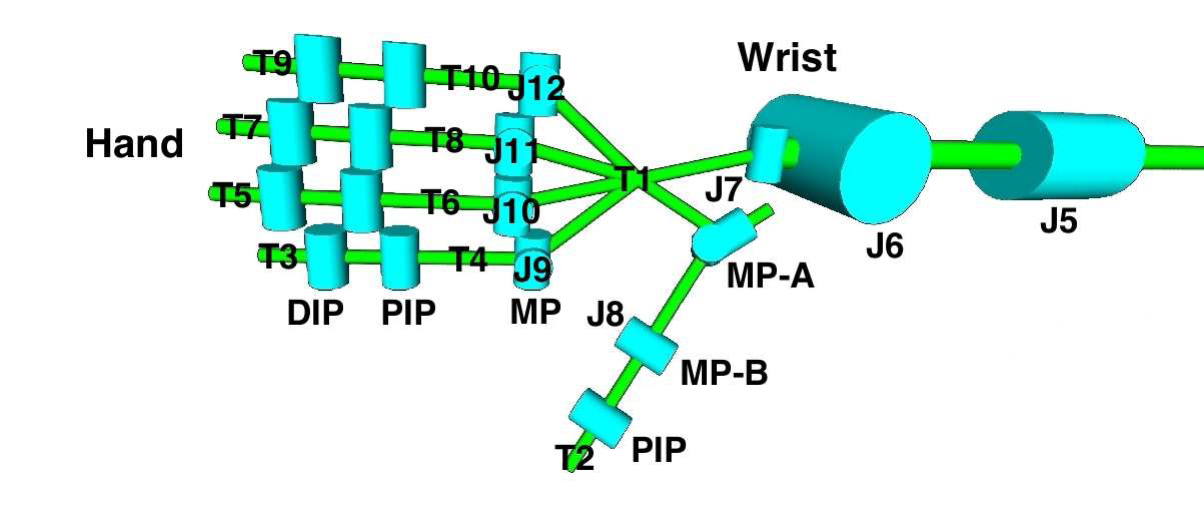

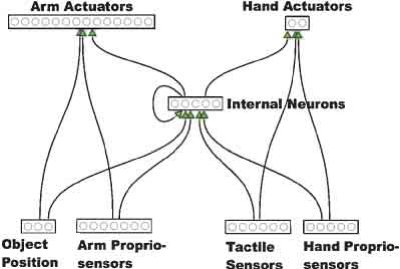

The architecture of the robot's neural controller includes 21 sensory neurons (which encode the current state of the corresponding sensors), five internal leaky neurons, and 16 motor neurons (which control the 16 corresponding actuators, i.e. the 14 arm muscles and the 2 hand controllers).

Figure 3. The architecture of the robot's neural controller. Arrows indicate blocks of fully connected neurons.
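The sensor and actuator counts given above add up to 7 + 5 + 6 + 3 = 21 inputs and 14 + 2 = 16 outputs. The sketch below shows one hypothetical way of packing the raw readings into the 21-element input vector and of unpacking the 16 motor outputs into seven flexor/extensor activation pairs plus the two hand commands. The normalisation ranges, the `max_dist` clipping value and the output ordering are assumptions made for illustration only.

```python
from typing import List, Sequence, Tuple

N_ARM, N_FINGERS, N_TOUCH, N_VISION = 7, 5, 6, 3   # 21 sensory neurons
N_MUSCLES, N_HAND_CTRL = 14, 2                      # 16 motor neurons


def sensory_vector(arm_angles: Sequence[float],
                   joint_limits: Sequence[Tuple[float, float]],
                   finger_flexion: Sequence[float],
                   touch: Sequence[bool],
                   rel_pos: Sequence[float],
                   max_dist: float = 0.5) -> List[float]:
    """Pack raw readings into the 21 inputs (hypothetical normalisation)."""
    sizes = (len(arm_angles), len(joint_limits), len(finger_flexion),
             len(touch), len(rel_pos))
    if sizes != (N_ARM, N_ARM, N_FINGERS, N_TOUCH, N_VISION):
        raise ValueError("unexpected number of sensor readings")
    # Arm angles rescaled to [0, 1] within each joint's limits.
    arm = [(a - lo) / (hi - lo) for a, (lo, hi) in zip(arm_angles, joint_limits)]
    # Finger extension/flexion assumed to be already expressed in [0, 1].
    hand = [min(max(f, 0.0), 1.0) for f in finger_flexion]
    # Binary contact flags: five fingers plus the palm/wrist segment.
    tact = [1.0 if t else 0.0 for t in touch]
    # Object-to-hand offset along three orthogonal axes, clipped to [-1, 1].
    vis = [min(max(d / max_dist, -1.0), 1.0) for d in rel_pos]
    return arm + hand + tact + vis


def split_motor_outputs(out: Sequence[float]):
    """Unpack 16 outputs into 7 (flexor, extensor) pairs and 2 hand commands."""
    if len(out) != N_MUSCLES + N_HAND_CTRL:
        raise ValueError("expected 16 motor outputs")
    pairs = [(out[2 * j], out[2 * j + 1]) for j in range(N_ARM)]
    fingers_cmd, thumb_cmd = out[N_MUSCLES], out[N_MUSCLES + 1]
    return pairs, fingers_cmd, thumb_cmd


if __name__ == "__main__":
    x = sensory_vector([0.0] * 7, [(-1.0, 1.0)] * 7, [0.2] * 5,
                       [False] * 6, [0.1, -0.05, 0.3])
    print(len(x))  # 21 inputs
```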

The connection weights, the biases, and the time constants of the internal neurons are encoded as free parameters and adapted through an evolutionary process. Individuals are tested for 18 trials with their arm set in different initial positions and are evaluated with a two-component fitness function that scores them primarily for their ability to lift the object and, secondarily, for their ability to reach the object with their hand.
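The evolutionary procedure can be sketched as a simple truncation-selection algorithm with Gaussian mutation over real-valued genotypes. Everything specific here is an assumption for illustration: the population size, the mutation width, the weighting that makes lifting dominate reaching, and the hypothetical `toy_trial` function, which stands in for a physics-simulated trial and simply rewards genotypes close to a fixed target.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Genome = List[float]


def two_component_fitness(lift: float, reach: float, lift_weight: float = 100.0) -> float:
    """Lifting dominates; reaching mainly matters while nobody lifts yet (assumed weighting)."""
    return lift_weight * lift + reach


def evolve(run_trial: Callable[[Genome, int], Tuple[float, float]],
           n_params: int, n_trials: int = 18, pop_size: int = 20,
           n_parents: int = 5, generations: int = 30, sigma: float = 0.1,
           seed: int = 0) -> Tuple[float, Genome]:
    """Return (best average fitness, best genome) found over all generations."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(n_params)] for _ in range(pop_size)]
    best: Tuple[float, Genome] = (-math.inf, [])
    for _ in range(generations):
        scored = []
        for genome in pop:
            # Average the two-component score over all trials (e.g. initial arm postures).
            total = sum(two_component_fitness(*run_trial(genome, t)) for t in range(n_trials))
            scored.append((total / n_trials, genome))
        scored.sort(key=lambda s: s[0], reverse=True)
        if scored[0][0] > best[0]:
            best = scored[0]
        # Truncation selection: each of the best parents produces mutated copies.
        parents = [g for _, g in scored[:n_parents]]
        pop = [[w + rng.gauss(0.0, sigma) for w in p]
               for p in parents for _ in range(pop_size // n_parents)]
    return best


def toy_trial(genome: Sequence[float], trial: int) -> Tuple[float, float]:
    """Hypothetical stand-in for a simulated trial: 'reach' grows near a target."""
    dist = math.sqrt(sum((w - 0.5) ** 2 for w in genome))
    reach = math.exp(-dist)
    lift = 1.0 if dist < 0.3 else 0.0
    return lift, reach


if __name__ == "__main__":
    fitness, genome = evolve(toy_trial, n_params=4)
    print(round(fitness, 3), [round(w, 2) for w in genome])
```

The large weight on the lifting component is one simple way to express "primarily lift, secondarily reach": the reaching term provides a gradient early on, when no individual lifts the object yet.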

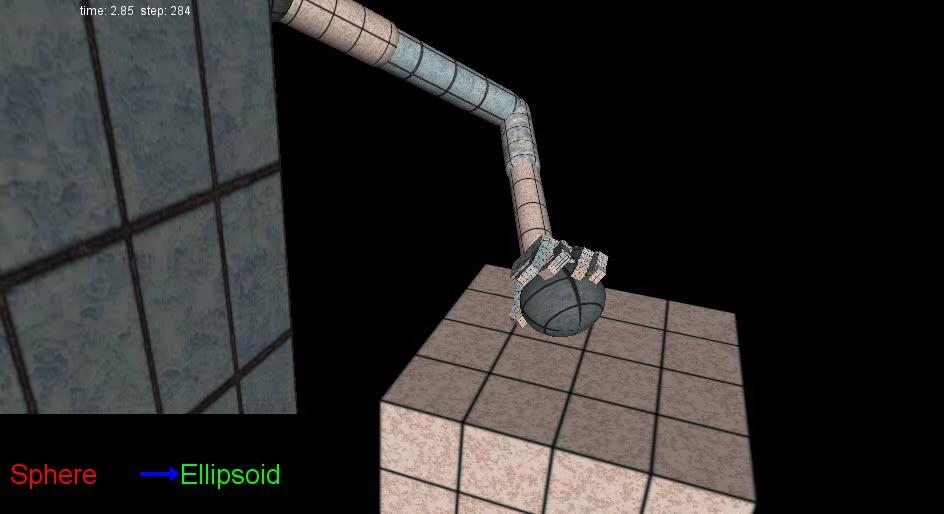

Figure 4. Behaviour exhibited by two typical adapted individuals.

Figure 4 includes videos of two typical adapted individuals. As can be seen, the robots manage to carry out their task successfully. Adapted robots also display a good ability to generalise their skill to objects of different shapes and sizes (for more details, see Massera, Cangelosi & Nolfi, 2007 and the supplementary material http://laral.istc.cnr.it/esm/arm-grasping/).

Notice how the adapted robots exploit the physical characteristics of their body and the properties arising from the interaction between their body and the physical environment to carry out their task. More specifically, the robots exploit the compliance of their fingers to grasp objects with different shapes and orientations, thus avoiding the need to adapt the posture of the hand to the shape of the object before initiating the grasping behaviour. Moreover, the strategy used by the robots involves three phases: a first phase in which the robot approaches the object from the left side, a second phase in which it hits the object from the bottom-left side, and a third phase in which the robot moves its hand under the object and rotates it so as to block the object from the right side. This strategy allows the robot to exploit the fact that, after the first hit, the object tends to move toward the palm of the hand, thus facilitating the grasping process.

3. Active categorical perception

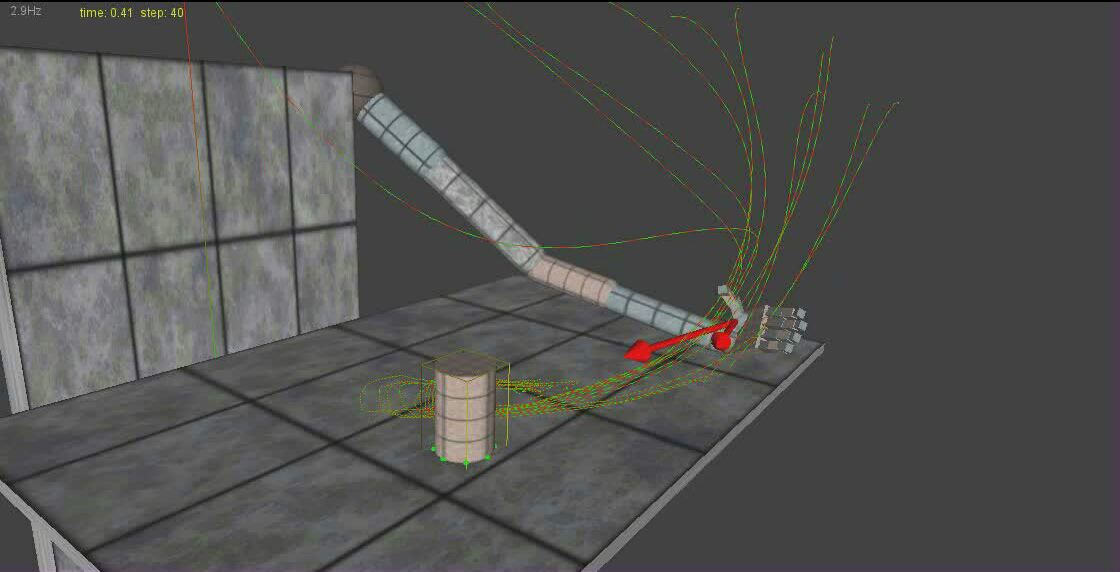

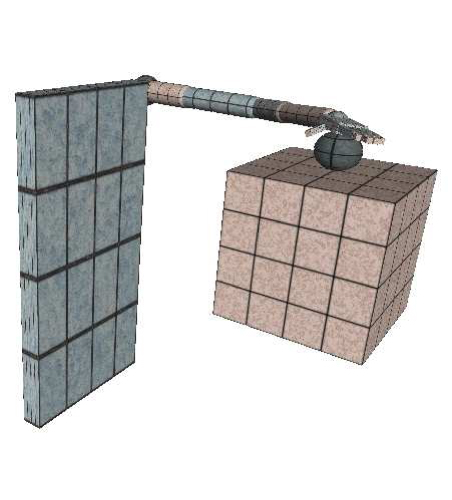

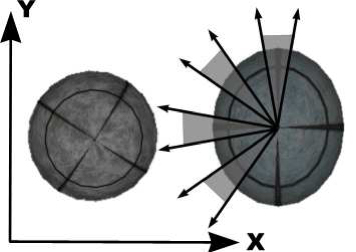

In a second series of experiments (Tuci, Massera & Nolfi, 2009a, 2009b), we studied how a neuro-controlled anthropomorphic robotic arm, equipped with coarse-grained tactile sensors, can perceptually categorise objects of different shapes placed on a table (Figure 5).

Figure 5. The robot and the environment. The top pictures show the two positions (relative to the robot) in which the object and the table may be located during the training process. The bottom pictures show the spherical and ellipsoid objects to be discriminated (the radius of the sphere is 2.5 cm; the radii of the ellipsoid are 2.5, 3.0 and 2.5 cm). The arrows in the bottom-left picture indicate the intervals within which the initial rotation of the ellipsoid is set during the training process.

As in the case of the experiments reported in the previous section, the robot is provided with an anthropomorphic robotic arm with 7 DOFs, a robotic hand with 20 DOFs, proprioceptive and touch sensors distributed within the arm and the hand and a simple vision system able to detect the relative position of the object (see Figure 2).

The 7 joints of the arm, which control the 7 corresponding DOFs, are actuated by 14 simulated antagonist muscles implemented according to Hill's muscle model. The 20 DOFs of the hand, instead, are actuated by 2 velocity-proportional controllers that determine the extension/flexion of all the fingers and the supination/pronation of the thumb.

Seven arm propriosensors encode the current angles of the seven corresponding DOFs located on the arm and on the wrist. Five hand propriosensors encode the current extension/flexion of the five corresponding fingers. Ten binary tactile sensors detect whether the palm, the second phalanx of the thumb, and the first and third phalanges of the other four fingers are in physical contact with another object.

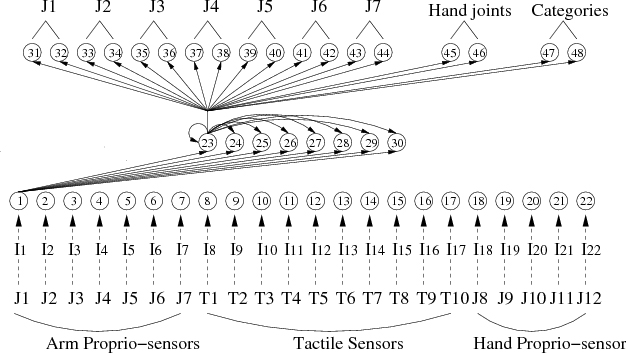

The architecture of the robot's neural controller (Figure 6) includes 22 sensory neurons (which encode the current state of the corresponding sensors), 8 internal leaky neurons, 16 motor neurons (which control the 16 corresponding actuators), and 2 categorization output neurons, which the robot uses to indicate the category of the current object.

Figure 6. The architecture of the neural controller. Internal neurons are fully connected. Additionally, each internal neuron receives one incoming synapse from each sensory neuron. Each motor neuron receives one incoming synapse from each internal neuron. There are no direct connections between sensory and motor neurons. All neurons are leaky integrators. Sensory neurons are also provided with a gain factor. Dashed arrows indicate the correspondences between the joint and tactile sensors and the input neurons.
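The topology described in the Figure 6 caption can be written down as a small leaky-integrator network. This sketch assumes Beer-style continuous-time neurons, tau * dy/dt = -y + input, integrated with an Euler step of 0.01 s (the ratio of the 4 s trial to its 400 time steps, assuming one integration step per time step). The sigmoid outputs, the random parameter ranges, and the assumption that the two categorisation outputs, like the motor neurons, receive one synapse from each internal neuron are not stated in the text. As in the caption, there are no sensory-to-motor weights.

```python
import math
import random
from typing import List, Sequence, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class LeakyController:
    """Sketch of Figure 6: sensory -> internal (recurrent) -> motor + categorisation."""

    def __init__(self, n_sens: int = 22, n_int: int = 8, n_motor: int = 16,
                 n_cat: int = 2, dt: float = 0.01, seed: int = 0) -> None:
        rng = random.Random(seed)
        u = rng.uniform
        self.n_motor, self.dt = n_motor, dt
        n_out = n_motor + n_cat
        # Weights: w[i][j] is the synapse from neuron i to neuron j.
        self.w_si = [[u(-1, 1) for _ in range(n_int)] for _ in range(n_sens)]
        self.w_ii = [[u(-1, 1) for _ in range(n_int)] for _ in range(n_int)]
        self.w_io = [[u(-1, 1) for _ in range(n_out)] for _ in range(n_int)]
        # Per-neuron bias and time constant; sensory neurons also have a gain.
        sizes = (("s", n_sens), ("i", n_int), ("o", n_out))
        self.gain = [u(0.5, 2.0) for _ in range(n_sens)]
        self.bias = {k: [u(-1, 1) for _ in range(n)] for k, n in sizes}
        self.tau = {k: [u(0.02, 0.5) for _ in range(n)] for k, n in sizes}
        self.y = {k: [0.0] * n for k, n in sizes}

    def _leak(self, layer: str, inputs: Sequence[float]) -> None:
        """Euler step of tau * dy/dt = -y + input for every neuron in a layer."""
        y, tau = self.y[layer], self.tau[layer]
        for k, inp in enumerate(inputs):
            y[k] += self.dt / tau[k] * (-y[k] + inp)

    def _out(self, layer: str) -> List[float]:
        return [sigmoid(y + b) for y, b in zip(self.y[layer], self.bias[layer])]

    def step(self, sensors: Sequence[float]) -> Tuple[List[float], List[float]]:
        """Advance the network by one time step; return (motor, categorisation) outputs."""
        if len(sensors) != len(self.gain):
            raise ValueError("expected one reading per sensory neuron")
        # Sensory neurons: leaky integration of the gain-scaled readings.
        self._leak("s", [g * x for g, x in zip(self.gain, sensors)])
        s_out, i_prev = self._out("s"), self._out("i")
        n_int = len(i_prev)
        # Internal neurons: input from every sensory and every internal neuron.
        self._leak("i", [sum(s_out[a] * self.w_si[a][j] for a in range(len(s_out)))
                         + sum(i_prev[b] * self.w_ii[b][j] for b in range(n_int))
                         for j in range(n_int)])
        i_out = self._out("i")
        # Motor and categorisation neurons: input from internal neurons only.
        self._leak("o", [sum(i_out[b] * self.w_io[b][k] for b in range(n_int))
                         for k in range(len(self.y["o"]))])
        o = self._out("o")
        return o[:self.n_motor], o[self.n_motor:]


if __name__ == "__main__":
    net = LeakyController()
    for _ in range(400):  # one 4 s trial with constant, made-up sensor readings
        motor, cat = net.step([0.5] * 22)
    print(len(motor), len(cat))  # → 16 2
```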

The connection weights and the biases, time constants and gain factors of the neurons are encoded as free parameters and adapted through an evolutionary process.
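To give a rough idea of the genotype's size, the free parameters can be counted from the Figure 6 topology. The count rests on assumptions the text does not state: every neuron (sensory, internal, motor and categorisation) has its own bias and time constant, the categorisation outputs receive one synapse from each internal neuron, and each sensory neuron has its own gain (`shared_gain=True` counts a single shared gain instead). The function name is hypothetical.

```python
def count_free_parameters(n_sens: int = 22, n_int: int = 8, n_motor: int = 16,
                          n_cat: int = 2, shared_gain: bool = False) -> int:
    """Count evolvable parameters for the Figure 6 topology (assumptions above)."""
    n_out = n_motor + n_cat
    # sensory->internal, internal->internal, internal->outputs; no sensory->motor links
    weights = n_sens * n_int + n_int * n_int + n_int * n_out
    n_neurons = n_sens + n_int + n_out
    biases = n_neurons
    time_constants = n_neurons
    gains = 1 if shared_gain else n_sens
    return weights + biases + time_constants + gains


print(count_free_parameters())                  # 176 + 64 + 144 + 48 + 48 + 22 = 502
print(count_free_parameters(shared_gain=True))  # 384 + 96 + 1 = 481
```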

In each trial k, an agent is rewarded for the ability to produce different categorization outputs for spherical and ellipsoid objects at the end of the trial and for the ability to touch the objects with the palm. The latter components of the fitness .

During evolution, each genotype is translated into an arm controller and evaluated 8 times in position A and 8 times in position B (Figure 5, top-left and top-right, respectively), for a total of 16 trials. For each position, the arm experiences the ellipsoid 4 times and the sphere 4 times. In the four trials in which the environment includes the ellipsoid, its orientation is randomly set within one of the four corresponding sectors shown in Figure 5 (bottom-left). Each trial lasts 400 time steps, corresponding to 4 s.
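The evaluation protocol can be sketched as a function that builds the 16 trials of one genotype. It reads "one of the four corresponding sectors" as one ellipsoid trial per sector; the sector bounds in degrees are hypothetical placeholders for those drawn in Figure 5, and the function name is illustrative. The time-step length follows directly from the text: 4 s / 400 steps = 10 ms.

```python
import random
from typing import Dict, List

STEPS_PER_TRIAL = 400
TRIAL_SECONDS = 4.0
DT = TRIAL_SECONDS / STEPS_PER_TRIAL  # 0.01 s, i.e. 10 ms per time step

# Hypothetical sector bounds in degrees; the actual sectors are those shown in Figure 5.
SECTORS_DEG = [(0.0, 45.0), (45.0, 90.0), (90.0, 135.0), (135.0, 180.0)]


def trial_schedule(rng: random.Random) -> List[Dict[str, object]]:
    """Build the 16 evaluation trials of one genotype."""
    trials: List[Dict[str, object]] = []
    for position in ("A", "B"):
        # Four ellipsoid trials, one per orientation sector.
        for lo, hi in SECTORS_DEG:
            trials.append({"position": position, "object": "ellipsoid",
                           "rotation_deg": rng.uniform(lo, hi)})
        # Four sphere trials; the rotation of a sphere is irrelevant.
        for _ in range(4):
            trials.append({"position": position, "object": "sphere",
                           "rotation_deg": None})
    return trials


if __name__ == "__main__":
    schedule = trial_schedule(random.Random(42))
    print(len(schedule), DT)
```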

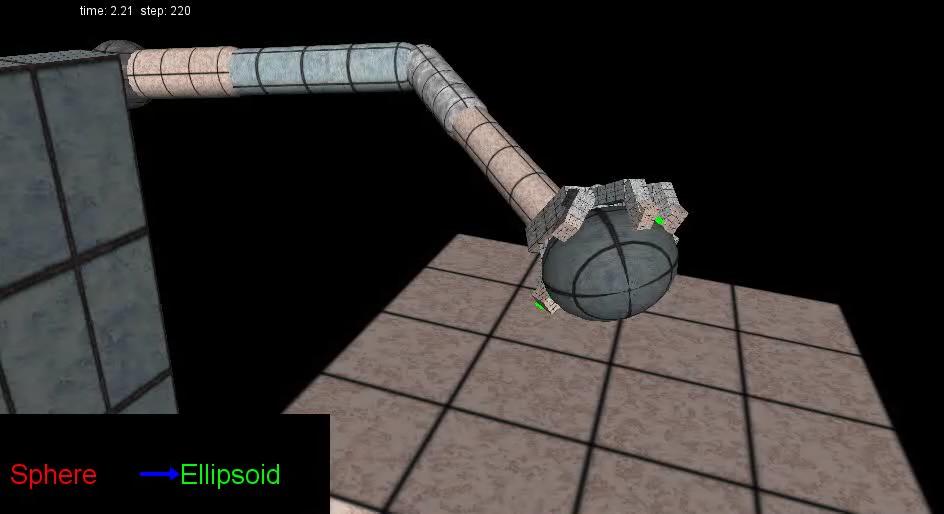

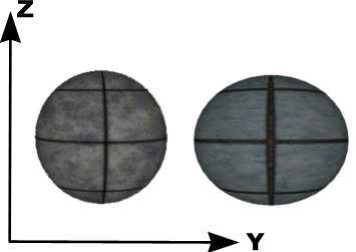

The analysis of the obtained results indicates that the best robots are indeed capable of developing an ability to effectively categorise the shape of the objects despite the high similarities between the two types of objects, the difficulty of effectively controlling a body with many DoFs, and the need to master the effects produced by gravity, inertia, collisions etc. You can observe the behaviour and the categorization answers produced by two of the best evolved individuals in the videos included in Figure 7. For more information see also the supplementary material.

Figure 7. The behaviour and the categorization outputs produced by two of the best evolved individuals. The arrow in the bottom-left corner represents the state of the robot's two categorization outputs. As you can see, the robot initially always produces the same default answer and then maintains or changes it on the basis of the information gathered during the interaction with the object.

More specifically, the best individuals are able to correctly categorise objects placed in positions and orientations already experienced during evolution, and also to generalise their skill to object positions and orientations never experienced during the evolutionary process.

The analysis of the best evolved agents indicates that one fundamental skill that allows them to solve the categorisation problem is the ability to interact with the external environment, and to modify the environment itself, so as to experience sensory states that are as differentiated as possible across categorical contexts. This result demonstrates the importance of sensory-motor coordination and, more specifically, of the active nature of situated categorisation, already highlighted in previous studies. On the other hand, the fact that sensory-motor coordination does not allow the agent to subsequently experience fully discriminative stimuli demonstrates that, in some cases, sensory-motor coordination should be complemented by additional mechanisms. In the best evolved individuals, this mechanism consists of an ability to integrate the information provided by sequences of sensory stimuli over time. More specifically, we provided evidence that the best robots categorise the current object as soon as they experience useful regularities, and that the categorisation process unfolds over a significant period of time (about 50 time steps) during which the agent keeps using the experienced evidence to confirm and reinforce its current tentative decision or to change it.
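The idea of integrating evidence over time while keeping a revisable tentative answer can be illustrated with a leaky accumulator. This is a deliberately simplified stand-in, not the evolved network: the per-step evidence values, the leak factor, the sign convention (positive values favour "ellipsoid") and the choice of "sphere" as the default answer are all assumptions.

```python
from typing import List, Sequence, Tuple


def integrate_evidence(evidence: Sequence[float], leak: float = 0.98,
                       default: str = "sphere") -> Tuple[float, List[str]]:
    """Leaky accumulation of per-step evidence; positive values favour 'ellipsoid'.

    The tentative answer is re-evaluated at every step, so it can be
    confirmed, reinforced or reversed as new evidence arrives.
    """
    acc = 0.0
    answers = []
    for e in evidence:
        acc = leak * acc + e            # older evidence slowly fades
        if acc > 0:
            answers.append("ellipsoid")
        elif acc < 0:
            answers.append("sphere")
        else:
            answers.append(default)     # no evidence yet: default answer
    return acc, answers


if __name__ == "__main__":
    # Uninformative steps, weak contrary evidence, then consistent ellipsoid evidence.
    stream = [0.0] * 20 + [-0.1] * 10 + [0.2] * 50
    _, answers = integrate_evidence(stream)
    print(answers[0], answers[25], answers[-1], answers.index("ellipsoid"))
```

Because earlier contrary evidence must first be outweighed, the answer switches only a few steps after the consistent evidence begins, which mirrors, in a very reduced form, the confirm-or-change dynamics described above.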

The analysis of the role played by the different sensory channels indicates that the categorisation process in the best evolved individuals relies primarily on the tactile sensors and secondarily on the hand and arm proprioceptive sensors (with the arm proprioceptive sensors playing no role in most cases).

It is interesting to note that at least one of the best evolved agents not only displays an ability to exploit all the relevant information but also an ability to fuse information coming from different sensory modalities in order to maximise the chance of taking the appropriate categorisation decision. More specifically, fusing the information provided by the tactile and hand proprioceptive sensors for objects located in position B allows the robot to categorise the shape of the object correctly in the majority of cases, even when one of the two sources of information is corrupted.

Finally, the analysis of the motor and categorization behaviour displayed by the evolved agents indicates that categorization is realised through a dynamical process extended over a significant amount of time. Indeed, the interaction between the robot and the environment can be divided into three temporal phases: (i) an initial phase, ending at approximately time step 250, in which the categorisation process begins but the categorisation answer produced by the agent is still reversible; (ii) an intermediate phase, ending at approximately time step 350, in which a categorisation decision is very often taken on the basis of previously experienced evidence; and (iii) a final phase in which the previous decision, now irreversible, is maintained.
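The phase boundaries can be expressed as a small helper that labels a time step and converts it to seconds using the 10 ms per step implied by 400 steps per 4 s trial. The boundaries at steps 250 and 350 are the approximate values reported above (so roughly 2.5 s and 3.5 s into the trial); the function name and labels are illustrative.

```python
STEP_SECONDS = 4.0 / 400  # 10 ms per time step (400 steps per 4 s trial)


def categorisation_phase(step: int, end_initial: int = 250,
                         end_intermediate: int = 350) -> str:
    """Label a time step with the (approximate) temporal phase it belongs to."""
    if not 0 <= step < 400:
        raise ValueError("a trial lasts 400 time steps (0..399)")
    if step < end_initial:
        return "initial: categorisation under way, answer still reversible"
    if step < end_intermediate:
        return "intermediate: decision usually taken"
    return "final: decision maintained"


for step in (100, 300, 380):
    print(step, f"{step * STEP_SECONDS:.2f} s", categorisation_phase(step))
```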

References

Massera G., Cangelosi A., Nolfi S. (2007). Evolution of Prehension Ability in an Anthropomorphic Neurorobotic Arm. Frontiers in Neurorobotics, 1(4):1-9.

Tuci E., Massera G., Nolfi S. (2009a). On the dynamics of active categorisation of different objects shape through tactile sensors. In Proceedings of the 10th European Conference on Artificial Life (ECAL 2009).

Tuci E., Massera G., Nolfi S. (2009b). Active categorical perception in an evolved anthropomorphic robotic arm. IEEE Congress on Evolutionary Computation (CEC), special session on Evolutionary Robotics.